Best Web Scraping Tools in 2025: Complete Comparison Guide

By The Visualping Team

Updated November 3, 2025

Introduction

Web scraping tools help you tap into the huge amount of information available online. Think of them as your personal data assistants: they automatically grab information from websites and turn messy HTML code into clean, organized data you can actually use.

MarketsandMarkets research projects strong growth for the web scraping market through 2025. Why? More and more companies need real-time data to stay competitive.

But here's the thing. With so many scraping tools out there, picking the right one can feel overwhelming. We'll walk through the best options available in 2025, breaking down what each tool does well, what it costs, and when it makes the most sense for your needs.

Quick heads up on the legal stuff: Before you start scraping, make sure you're playing by the rules. Check website terms of service, respect robots.txt files, and stay compliant with data protection laws like GDPR and CCPA. The Computer Fraud and Abuse Act (CFAA) sets the ground rules in the U.S., and similar laws exist worldwide.

What is Web Scraping?

Web scraping is basically teaching software to collect information from websites automatically. Instead of you copying and pasting data by hand (yawn), these tools do the heavy lifting. They can process thousands of pages in minutes and pull out exactly what you need. Then they package it in formats like CSV or JSON, or send it straight to your database.
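Here's a minimal Python sketch of that last packaging step. It assumes a scraper has already produced a list of records (the field names and values are hypothetical) and uses only the standard `csv` and `json` modules:

```python
import csv
import io
import json

# Hypothetical records, standing in for whatever a scraper extracted.
records = [
    {"product": "Widget A", "price": 19.99, "in_stock": True},
    {"product": "Widget B", "price": 24.5, "in_stock": False},
]


def to_csv(rows: list[dict]) -> str:
    """Serialize flat dicts to CSV text, using the first row's keys as the header."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows: list[dict]) -> str:
    """Serialize the same records as a pretty-printed JSON array."""
    return json.dumps(rows, indent=2)


print(to_csv(records))
print(to_json(records))
```

Writing to a file instead of a string only means passing an open file (opened with `newline=""`, as the `csv` docs recommend) to `csv.DictWriter`.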

Modern scraping tools are pretty clever. Here's what they can do:

Grab specific data: Want just the prices from an e-commerce site? Or contact info from a business directory? Scrapers can target exactly what you need using CSS or XPath selectors.

Work on autopilot: Set it and forget it. Schedule your scraper to run daily, weekly, or whenever you want. Fresh data without lifting a finger.

Make sense of messy code: Raw HTML is ugly. Scrapers transform it into neat spreadsheets or databases that you can actually work with.

Handle serious volume: Whether you need data from one page or a million, good scrapers can handle the scale.
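To make the selector idea concrete, here's a small sketch that uses only Python's standard library. `xml.etree.ElementTree` supports a limited subset of XPath, which is enough for the hypothetical, well-formed product markup below. Real-world HTML is often not well-formed, so production scrapers usually reach for a forgiving parser such as lxml or BeautifulSoup, which offer fuller XPath and CSS selector support respectively.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed product listing markup.
PAGE = """
<html><body>
  <div class="product"><h2>Widget A</h2><span class="price">$19.99</span></div>
  <div class="product"><h2>Widget B</h2><span class="price">$1,249.00</span></div>
</body></html>
"""


def extract_products(markup: str) -> list[dict]:
    """Pull name/price pairs from product cards using ElementTree's XPath subset."""
    root = ET.fromstring(markup.strip())
    products = []
    # ".//div[@class='product']" matches every product card at any depth.
    for card in root.findall(".//div[@class='product']"):
        name = card.findtext("h2", default="").strip()
        price_text = card.findtext("span[@class='price']", default="").strip()
        if not price_text:
            continue  # skip cards with no price rather than crash
        # Drop the currency symbol and thousands separator to get a number.
        price = float(price_text.replace("$", "").replace(",", ""))
        products.append({"name": name, "price": price})
    return products


print(extract_products(PAGE))
```

One limitation to note: `[@class='product']` is an exact string match, so an element with `class="product featured"` would be missed. CSS class selectors in libraries like BeautifulSoup treat the class attribute as a space-separated list instead.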

Why Businesses Need Web Scraping Tools

Web scraping isn't just about collecting data efficiently and at scale; it's about acting on that data and drawing intelligence from it.

Market Intelligence and Competitive Monitoring

Want to know what your competitors are up to? This is where web scraping becomes essential. Recent research published in the Journal of Business Research reveals something fascinating: companies actively monitor competitors' strategic moves and market reactions as competitive signals. The study found that firms in competitive markets closely track rivals' announcements, acquisitions, and stock market reactions to make faster strategic decisions.

Here's what real executives say about competitive monitoring:

"In this fiercely competitive and fast-changing market, as soon as a competitor gains new advantages through an acquisition, we're at risk. It's a dangerous signal; it shows our edge is under threat, and we must react swiftly or face erosion of market share, or worse, being edged out entirely." - Head of Brand and PR, BYD

"We closely monitor our rivals' M&A moves and remain on high alert. An acquisition can mean they are acquiring advanced technologies or unique resources we lack, potentially outpacing us in innovation and market position." - Head of Marketing, Geely

This isn't just theory. Companies use web scraping to automatically track competitor pricing, new product launches, market positioning, press releases, and strategic announcements in real time.

Lead Generation

Finding potential customers is hard work. Web scraping makes it easier by pulling contact information, company details, and prospect data from directories and professional networks. Build your sales pipeline while you sleep.

Price Monitoring

If you're in e-commerce, this is a game changer. Track competitor prices across thousands of products automatically. Why does this matter? McKinsey research found that getting your pricing right can boost margins by 2 to 7%.
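Once competitor prices are scraped, the monitoring logic itself can be simple. A minimal sketch, assuming prices are already collected into dictionaries keyed by a shared product ID (the SKUs, prices, and 5% threshold are all hypothetical):

```python
def price_gaps(our_prices: dict[str, float],
               competitor_prices: dict[str, float],
               threshold_pct: float = 5.0) -> list[tuple[str, float]]:
    """Return (sku, gap_pct) for products where the competitor undercuts
    our price by more than threshold_pct percent."""
    flagged = []
    for sku, ours in our_prices.items():
        theirs = competitor_prices.get(sku)
        if theirs is None or ours <= 0:
            continue  # competitor doesn't list it, or our data is bad
        gap_pct = (ours - theirs) / ours * 100
        if gap_pct > threshold_pct:
            flagged.append((sku, round(gap_pct, 1)))
    return flagged


ours = {"SKU-1": 100.0, "SKU-2": 50.0, "SKU-3": 20.0}
theirs = {"SKU-1": 90.0, "SKU-2": 49.0}
print(price_gaps(ours, theirs))
```

Here SKU-1 is flagged (the competitor is 10% cheaper), SKU-2 falls under the threshold at 2%, and SKU-3 is skipped because the competitor doesn't carry it.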

Content Aggregation

Media companies and content platforms use scraping to pull together news, reviews, and social media data. It's how they provide comprehensive coverage without a team of hundreds.

Research & Analysis

Academics, data scientists, and market researchers use scraping to build datasets for studies, train AI models, or analyze market trends at scale.

Without automation, these tasks take forever. A market research project that might take weeks by hand? A web scraper knocks it out in hours. Plus, you get better accuracy and consistency.

How to Choose the Right Web Scraping Tool

Picking a web scraper isn't one-size-fits-all. Here's what you need to think about:

1. Technical Skill Level

Be honest about your team's technical chops.

No-Code Tools: These have visual interfaces where you just point and click to select what you want. Perfect for marketers, analysts, and business folks who don't code.

Low-Code Tools: You get a visual interface but can add custom scripts when things get tricky. Good middle ground if someone on your team knows a bit of code.

Developer Tools: These are APIs and libraries that require actual programming. Maximum flexibility, but you need technical skills to use them.

2. Scale Requirements

Think about both now and later. How many pages do you need to scrape? Hundreds? Thousands? Millions? Are you doing this once or continuously? How much data are we talking about here?

3. Website Complexity

Modern websites can be surprisingly tricky to scrape.

JavaScript-heavy sites: Some sites load content dynamically with JavaScript. You need a scraper that can render these pages properly.

Login walls: Getting data behind authentication takes special handling.

Anti-bot measures: CAPTCHAs, rate limiting, you name it. Some sites really don't want bots around.

Complex layouts: Dynamic page structures need smart scraping logic to adapt.

4. Integration Requirements

Where does your scraped data need to go?

Maybe you need API access to feed data directly into your application. Or Google Sheets integration for easy analysis. Perhaps database connections for your data warehouse. Or webhook notifications for real-time alerts.
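For the webhook route, the receiving system typically expects an HTTP POST with a JSON body. This sketch only builds such a request with Python's standard `urllib.request`; the endpoint URL and payload fields are hypothetical placeholders, and the exact format depends on the service you're posting to.

```python
import json
import urllib.request


def build_webhook_request(url: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a webhook endpoint."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_webhook_request(
    "https://example.com/hooks/scraper",  # hypothetical endpoint
    {"event": "price_changed", "sku": "SKU-1", "new_price": 90.0},
)
# To actually deliver it: urllib.request.urlopen(req, timeout=10)
print(req.get_method(), req.full_url)
```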

5. Budget Considerations

Web scraping tools range from totally free to thousands per month. Find the sweet spot between what you need and what you can spend. Think about:

- Per-page pricing versus unlimited models

- Support and maintenance costs

- Time spent building custom solutions

- The cost of doing it manually instead
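A quick break-even calculation makes the per-page versus flat-rate question concrete. The figures here are hypothetical, not any vendor's real prices: scraping 2,000 pages once a day is about 2,000 × 30 = 60,000 pages a month, which at $0.002 per page costs $120, versus $100 for a flat plan. The two cost the same at $100 ÷ $0.002 = 50,000 pages a month.

```python
def monthly_pages(pages_per_run: int, runs_per_day: float, days: int = 30) -> float:
    """Estimate how many pages a recurring job fetches in a month."""
    return pages_per_run * runs_per_day * days


def break_even_pages(per_page_price: float, flat_price: float) -> float:
    """Monthly volume at which per-page and flat pricing cost the same."""
    return flat_price / per_page_price


def cheaper_plan(pages: float, per_page_price: float, flat_price: float) -> str:
    """Name the cheaper option at a given monthly volume."""
    return "per-page" if pages * per_page_price < flat_price else "flat"


# Hypothetical inputs, not any vendor's real pricing.
PER_PAGE = 0.002   # dollars per page
FLAT = 100.0       # dollars per month

volume = monthly_pages(pages_per_run=2_000, runs_per_day=1)
print(volume, break_even_pages(PER_PAGE, FLAT), cheaper_plan(volume, PER_PAGE, FLAT))
```

This ignores the other costs in the list above, such as developer time and maintenance, which can matter as much as the license fee.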

Best Web Scraping Tools for 2025: Detailed Comparison

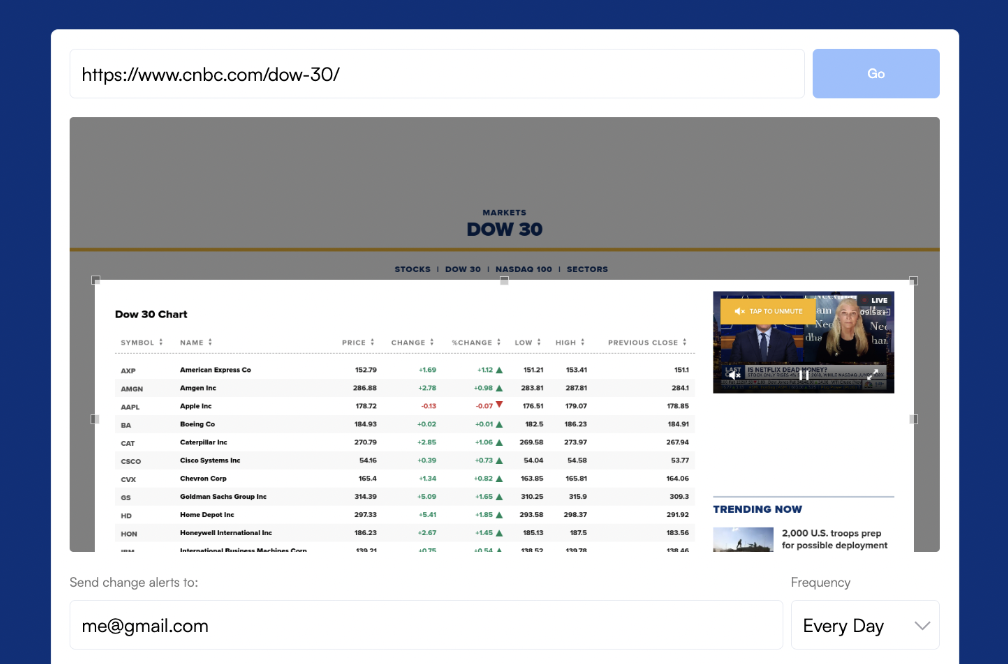

1. Visualping

What it does best: Visual web scraping and change detection

Perfect for: Business teams who want automation without the tech headaches

Visualping is the leader when it comes to making web scraping and website change monitoring simple. While other tools require coding skills or complex setup, Visualping gives you an easy interface that works on pretty much any website. Non-technical teams love it.

What you can do with it:

- Point and click: Just highlight the parts of a page you want to extract. No code needed.

- Set it and forget it: Schedule scraping jobs to run hourly, daily, or on your own custom schedule.

- Instant spreadsheets: Data flows automatically into Google Sheets. Start analyzing right away.

- Smart filtering: Only grab what matters by filtering for specific keywords.

- API access: Plug scraped data straight into your apps through a REST API.

- Handles modern websites: Works with JavaScript-heavy sites that load content dynamically.

- Bulk processing: Scrape multiple URLs at the same time.

Real-world examples:

- E-commerce teams track competitor prices across thousands of products

- Investment analysts monitor company press releases and SEC filings

- Content marketers gather industry news and trends

- Sales teams extract leads from business directories

- Real estate pros keep tabs on property listings

What it costs: Business plans start at $100/month and scale up for enterprise needs. You get generous page quotas and unlimited scheduled jobs even at basic tiers.

What's great:

- Anyone can use it, but it's powerful enough for advanced users

- Tons of integration options including webhooks and API

- Handles JavaScript-heavy modern websites like a champ

- Customer support actually helps you get set up

What to know:

- If you need to scrape millions of pages, you might need a custom solution

- Some complex authentication scenarios might need help from support

Getting started: Check out Visualping's business features to explore what it can do and start a free trial.

2. Oxylabs

What it does best: Enterprise data collection at massive scale

Perfect for: Large operations needing proxy networks and bulletproof reliability

Oxylabs is built for the big leagues. They provide enterprise-focused scraping APIs backed by a huge proxy network. If you're running large-scale data collection and need high success rates across different geographies, this is your tool.

What you can do with it:

- Ready-to-use API: Pre-built solutions for common scraping needs

- Massive proxy network: Over 100 million IPs to avoid getting blocked

- Global reach: Collect location-specific data from any country

- Full browser automation: Handles dynamic sites with JavaScript

- CAPTCHA solving: Automatically deals with common challenges

When to use it:

- Fraud detection needing real-time data verification

- Compliance monitoring across global markets

- Large-scale market research projects

- Ad verification and brand protection

What it costs: Basic plans start at $49/month. Enterprise pricing scales with how many requests you make. You can try it free for a week.

What's great:

- That large proxy network helps keep success rates high

- Enterprise-grade reliability and uptime

- Advanced features for really complex scenarios

What to know:

- Steeper learning curve. You need technical skills.

- More expensive than simpler tools

- Probably overkill if you just need basic scraping

Learn more: Oxylabs API Documentation

3. Smartproxy

What it does best: Proxy-based scraping for SEO pros

Perfect for: SEO professionals and agencies needing diverse proxy options

Smartproxy combines proxy services with scraping APIs. It's especially popular with SEO professionals and digital marketing agencies who need to collect search engine data and social media insights.

What you can do with it:

- SERP scraping: Pull data from Google, Bing, and other search engines

- E-commerce scraping: Pre-built tools for major shopping platforms

- Social media data: Collect public social data

- 65 million+ proxies: Huge network of residential, datacenter, and mobile proxies

- Global coverage: 195+ locations for localized data

Best uses:

- SEO rank tracking and keyword research

- Social media monitoring and sentiment analysis

- E-commerce price intelligence

- Ad verification across different locations

What it costs: Starts at $50/month with a 3,000-request free trial. Pricing goes up based on how many requests you make and which proxy types you need.

What's great:

- Excellent for SEO and marketing work

- Competitive pricing for proxy access

- Good coverage of major platforms with ready-made scrapers

What to know:

- Mainly focused on SEO and marketing uses

- Custom scraping needs more setup work

- API-first design means you need technical skills to implement

Documentation: Smartproxy Help Center

4. Diffbot

What it does best: AI-powered content understanding

Perfect for: Teams needing smart content classification and entity extraction

Diffbot takes a different approach. Instead of just parsing HTML, their AI actually understands content. Machine learning models automatically identify and extract structured data from articles, products, discussions, and more.

What you can do with it:

- Smart classification: AI figures out content type (article, product, etc.) automatically

- Entity recognition: Pulls out specific things like people, companies, prices

- Knowledge graphs: Build structured knowledge bases from web data

- Analyze API: Automatically detects page types and extracts appropriately

- Language understanding: Gets content context and relationships

Best uses:

- Competitive intelligence and market analysis

- Content aggregation for media platforms

- Building knowledge bases for AI and machine learning projects

- Sentiment analysis from product reviews

- Research needing semantic understanding of content

What it costs: Plans start at $299/month, so it's definitely premium pricing. They offer a 14-day free trial.

What's great:

- AI-driven extraction needs less fiddling with settings

- Excellent for messy content that needs understanding

- Powerful for building knowledge graphs

- Handles different content formats across sites really well

What to know:

- Premium pricing puts it out of reach for smaller teams

- Might be overkill for simple structured data

- You need technical skills to integrate it

Technical docs: Diffbot API Overview

5. ParseHub

What it does best: Desktop scraping application

Perfect for: Budget-conscious users needing visual scraping that runs locally

ParseHub is a desktop app you download and run on your computer. It has point-and-click scraping, and the free tier makes it accessible for individuals, researchers, and small projects without much budget.

What you can do with it:

- Visual builder: Point-and-click interface for building scrapers

- Complex navigation: Handle pagination, dropdowns, and tabs

- Export options: Download as CSV, JSON, or Excel

- Image scraping: Extract and download images and files

- Scheduled runs: Automate recurring scraping jobs

Who uses it:

- Graduate students and academic researchers

- Freelance data analysts and consultants

- Startups with tight budgets

- Individual developers building datasets

What it costs:

- Free plan: 200 pages per run, 40 minutes per run, 5 public projects

- Paid plans: Higher tiers for more limits and private projects

What's great:

- Free tier lets you experiment and learn

- Desktop app gives you local control

- Good documentation and tutorials

- Active community for support

What to know:

- Free tier restrictions make business use tough

- Desktop-based setup is less convenient than cloud solutions

- Advanced features need paid plans

- May struggle with heavily protected sites

Get started: ParseHub Documentation

Web Scraping Tool Comparison Matrix

| Feature | Visualping | Oxylabs | Smartproxy | Diffbot | ParseHub |
|---|---|---|---|---|---|
| Ease of Use | ★★★★★ | ★★★☆☆ | ★★★☆☆ | ★★★☆☆ | ★★★★☆ |
| No-Code Interface | Yes | No | Limited | No | Yes |
| JavaScript Rendering | Yes | Yes | Yes | Yes | Yes |
| API Access | Yes | Yes | Yes | Yes | Limited |
| Proxy Network | Included | Extensive | Extensive | Included | Limited |
| Free Tier | Trial | Trial | Trial | Trial | Yes |
| Best For | Business Users | Enterprises | SEO/Marketing | AI Applications | Students/Researchers |
| Learning Curve | Low | High | Medium | Medium | Low |
| Support Quality | Excellent | Good | Good | Good | Community |

Advanced Web Scraping Considerations

Legal and Ethical Guidelines

Let's talk about doing web scraping the right way. You need to follow both legal rules and ethical standards.

Respect robots.txt: This file tells crawlers which parts of a site they're asked to stay out of. Always check it and follow what it says. Here's Google's guide to robots.txt if you want to learn more.
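If you scrape with your own code, Python's standard library can do the robots.txt check for you via `urllib.robotparser`. A minimal sketch with a hypothetical robots.txt and bot name; against a live site you'd call `set_url()` and `read()` instead of `parse()`:

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt file, parsed from a string for illustration.
ROBOTS_TXT = """
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "ExampleScraper/1.0"  # hypothetical bot name
for url in ("https://example.com/products/widget-a",
            "https://example.com/checkout/cart"):
    print(url, parser.can_fetch(USER_AGENT, url))

# Seconds the site asks crawlers to wait between requests (None if unset).
print("crawl delay:", parser.crawl_delay(USER_AGENT))
```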

Read the terms of service: Many websites explicitly ban automated data collection in their terms. Breaking these rules could get you in legal trouble under contract law or the CFAA.

Don't overwhelm servers: Put reasonable delays between your requests. Most responsible scrapers limit themselves to one or two requests per second. Nobody likes a site hog.
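One simple way to enforce those delays in your own scraper is a small throttle that sleeps just long enough between requests. This is a sketch, not a production rate limiter; the clock and sleep functions are injectable only so the behavior can be tested without real waiting.

```python
import time
from typing import Callable, Optional


class Throttle:
    """Space out calls to wait() to at most requests_per_second per second."""

    def __init__(self, requests_per_second: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.min_interval = 1.0 / requests_per_second
        self.clock = clock  # injectable so tests need not really wait
        self.sleep = sleep
        self.last_call: Optional[float] = None

    def wait(self) -> None:
        """Sleep just long enough to respect the minimum interval."""
        now = self.clock()
        if self.last_call is not None:
            elapsed = now - self.last_call
            if elapsed < self.min_interval:
                self.sleep(self.min_interval - elapsed)
                now = self.clock()
        self.last_call = now


throttle = Throttle(requests_per_second=1)
# for url in urls:        # 'urls' and 'fetch' are placeholders
#     throttle.wait()
#     fetch(url)
```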

Protect personal data: If you're collecting information about people, make sure you follow GDPR, CCPA, and other privacy laws. Think about data minimization. Only collect what you actually need.
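One simple way to practice data minimization is an allowlist applied before anything is stored, so fields you don't need are never kept. The field names below are hypothetical, and an allowlist on its own doesn't make you GDPR or CCPA compliant.

```python
# Hypothetical allowlist: only the fields the analysis actually needs.
ALLOWED_FIELDS = {"company", "industry", "city"}


def minimize(record: dict) -> dict:
    """Drop every field that is not explicitly allowlisted."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}


raw = {"company": "Acme Ltd", "industry": "Retail", "city": "Leeds",
       "contact_email": "jane@example.com"}
print(minimize(raw))
```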

Give credit: When you use scraped data publicly, consider crediting the original sources where it makes sense.

Want to dig deeper? Check out the hiQ Labs v. LinkedIn case, which shaped how U.S. courts apply the CFAA to scraping publicly available web data.

Technical Implementation Best Practices

Handle errors gracefully: Build in error handling for connection failures, timeouts, and unexpected page layouts. Production scrapers should handle problems without losing data.
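A common pattern for transient failures is retrying with exponential backoff. Here's a minimal sketch; the attempt count and delays are arbitrary assumptions. Catching `OSError` covers connection errors and timeouts, and also `urllib.error.URLError`, which is an `OSError` subclass. Its `HTTPError` subclass is caught too, so in real use you may want to stop retrying on permanent errors such as a 404.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(fetch: Callable[[], T], attempts: int = 4,
                 base_delay: float = 1.0,
                 retry_on: tuple[type[BaseException], ...] = (OSError,),
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fetch(), retrying transient failures with exponential backoff.

    Delays grow as base_delay * 2**attempt, plus a little random jitter so
    many workers don't retry in lockstep. The last error is re-raised.
    """
    for attempt in range(attempts):
        try:
            return fetch()
        except retry_on:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt + random.uniform(0, 0.1))
    raise ValueError("attempts must be at least 1")  # only reached if attempts < 1
```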

Validate your data: Always double-check that extracted data is complete and accurate. Set up checks for expected data types, formats, and ranges.
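Validation can be as simple as a function that returns a list of problems per record. The rules below (required fields, a plain decimal price, a price range, an http(s) URL) are hypothetical examples for a product scraper:

```python
import re

# Hypothetical rules for a product record: adjust to your own schema.
REQUIRED = ("name", "price", "url")
PRICE_RE = re.compile(r"^\d+(\.\d{1,2})?$")   # e.g. "19.99", not "$19.99"
PRICE_RANGE = (0.01, 100_000)


def validate(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = [f"missing {field}" for field in REQUIRED if not record.get(field)]
    price = str(record.get("price") or "")
    if price:
        if not PRICE_RE.match(price):
            problems.append(f"bad price format: {price!r}")
        elif not PRICE_RANGE[0] <= float(price) <= PRICE_RANGE[1]:
            problems.append(f"price out of range: {price}")
    url = str(record.get("url") or "")
    if url and not url.startswith(("http://", "https://")):
        problems.append(f"bad url: {url!r}")
    return problems


print(validate({"name": "Widget A", "price": "19.99", "url": "https://example.com/a"}))
print(validate({"name": "", "price": "N/A", "url": "ftp://example.com/b"}))
```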

Set up monitoring: Websites change their structure over time, and those changes can quietly break your scraper. Change detection tools like Visualping can alert you when a target page changes, so you know when to update your scraper.

Store data securely: Plan for organized, secure storage. Think about how long you'll keep data, backup procedures, and who should have access.

Plan for growth: Design your scrapers to scale as your needs grow. Cloud-based solutions generally scale better than running things locally.

Common Challenges and Solutions

Challenge: Websites change all the time and break my scraper.

Solution: Use robust selectors; stable IDs or data attributes hold up better than long, position-based CSS paths. Set up change detection monitoring. Have backup selectors ready to go.
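Backup selectors can be as simple as an ordered list tried in turn. A sketch using ElementTree's XPath subset, with hypothetical selectors; when every selector comes back empty, that's a strong hint the page layout changed and the scraper needs attention:

```python
import xml.etree.ElementTree as ET
from typing import Optional

# Hypothetical selectors, ordered from most to least preferred.
PRICE_SELECTORS = [
    ".//*[@id='price']",               # stable ID, tried first
    ".//span[@data-testid='price']",   # data attribute as a backup
    ".//span[@class='price']",         # class name as a last resort
]


def first_match(root: ET.Element, selectors: list[str]) -> Optional[str]:
    """Return the text of the first selector that matches, or None."""
    for selector in selectors:
        node = root.find(selector)
        if node is not None and node.text:
            return node.text.strip()
    return None  # every selector failed: likely a layout change


page = ET.fromstring("<div><span data-testid='price'>$12</span></div>")
print(first_match(page, PRICE_SELECTORS))
```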

Challenge: Sites block my automated access.

Solution: Use reputable proxy services. Implement reasonable rate limiting. Rotate user agents. Pick scraping tools with built-in anti-blocking features.

Challenge: I can't access JavaScript-rendered content.

Solution: Use tools with headless browser capabilities or JavaScript rendering. Visualping, Oxylabs, and Smartproxy all handle this.

Challenge: Large-scale scraping gets expensive fast.

Solution: Optimize to scrape only what you need. Cache pages you access frequently. Schedule jobs during off-peak times when possible.
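Caching can be a thin wrapper around whatever fetch function you already use. A minimal sketch of a time-based cache; the one-hour default lifetime is an arbitrary assumption, and the fetch function and clock are injected so it can be tested offline:

```python
import time
from typing import Callable, Dict, Tuple


class PageCache:
    """Keep fetched pages for ttl seconds so repeat lookups skip the network."""

    def __init__(self, fetch: Callable[[str], str], ttl: float = 3600,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.fetch = fetch
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}  # url -> (fetched_at, html)

    def get(self, url: str) -> str:
        """Return a cached copy if it is still fresh, otherwise refetch."""
        now = self.clock()
        cached = self._store.get(url)
        if cached is not None and now - cached[0] < self.ttl:
            return cached[1]  # still fresh: no request sent
        html = self.fetch(url)
        self._store[url] = (now, html)
        return html
```

For sites that send `ETag` or `Last-Modified` headers, HTTP conditional requests are another way to avoid re-downloading pages that haven't changed.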

Alternative Approaches to Web Scraping

Web scraping is powerful, but it's not always the best answer. Consider these alternatives when they fit better:

Official APIs

Many platforms offer official APIs that give you structured data access with guaranteed stability and clear legal standing. Always check for public APIs before building scrapers for major platforms.

Data Providers

Third-party vendors sell aggregated datasets from various sources. You're trading money for convenience and legal clarity.

RSS Feeds

For monitoring content updates, RSS feeds provide a legitimate, standardized way to track changes. Many sites still offer RSS for news, blogs, and updates.
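RSS is plain XML, so even the standard library can read it. A minimal sketch that returns only items you haven't seen yet, using a trimmed, hypothetical RSS 2.0 feed; Atom feeds use a different structure and XML namespace, which a dedicated library such as feedparser handles for you:

```python
import xml.etree.ElementTree as ET

# A trimmed, hypothetical RSS 2.0 feed.
FEED = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example News</title>
    <item><title>Price update</title><link>https://example.com/a</link>
      <pubDate>Mon, 03 Nov 2025 09:00:00 GMT</pubDate></item>
    <item><title>New product</title><link>https://example.com/b</link>
      <pubDate>Tue, 04 Nov 2025 09:00:00 GMT</pubDate></item>
  </channel>
</rss>"""


def new_items(feed_xml: str, seen_links: set[str]) -> list[dict]:
    """Return feed items whose <link> has not been seen before."""
    root = ET.fromstring(feed_xml)
    items = []
    for item in root.iter("item"):
        link = item.findtext("link", default="")
        if link and link not in seen_links:
            items.append({"title": item.findtext("title", default=""),
                          "link": link,
                          "published": item.findtext("pubDate", default="")})
    return items


print(new_items(FEED, seen_links={"https://example.com/a"}))
```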

Website Change Detection

Sometimes you just need to know when content changes, not extract massive datasets. Specialized change detection tools offer a more efficient approach than full-scale scraping.
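The core idea behind change detection can be sketched in a few lines: fingerprint the page text with a hash, and only compute a line-by-line diff when the fingerprint changes. This is a generic illustration that assumes you've already fetched and extracted the text. It isn't a description of how any particular tool works internally.

```python
import difflib
import hashlib


def fingerprint(text: str) -> str:
    """Hash normalized text so unchanged pages compare equal cheaply."""
    normalized = " ".join(text.split())  # ignore whitespace-only differences
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def describe_change(old: str, new: str) -> list[str]:
    """Return removed ('- ') and added ('+ ') lines, or [] if nothing changed."""
    if fingerprint(old) == fingerprint(new):
        return []
    diff = difflib.ndiff(old.splitlines(), new.splitlines())
    # ndiff also yields unchanged ('  ') and hint ('? ') lines; keep only edits.
    return [line for line in diff if line.startswith(("- ", "+ "))]


print(describe_change("Price: $10\nIn stock", "Price: $12\nIn stock"))
```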

Conclusion: Choosing Your Web Scraping Solution

The right web scraping tool depends on what you actually need. Here's the quick guide:

For most business teams: Visualping hits the sweet spot between power and ease of use. The visual interface, great integrations, and ability to handle different website types make it work for most business needs. Plus, you don't need to be technical to use it.

For massive enterprise operations: Oxylabs or Smartproxy give you the infrastructure, proxy networks, and reliability you need for large-scale projects where success rates and performance really matter.

For AI and machine learning work: Diffbot's intelligent content extraction and knowledge graph features are perfect for teams building AI systems that need to understand web content semantically.

For learning on a budget: ParseHub's free tier lets students, researchers, and individuals explore web scraping without spending money.

Web scraping keeps evolving with new technology and changing legal rules. The best implementations balance technical capability, legal compliance, and ethical responsibility while delivering real business value.

Whether you need competitive intelligence, price monitoring, lead generation, or market research, modern web scraping tools make data extraction accessible and practical.

Ready to get started? Try Visualping with a free trial, or talk to our team for help implementing web scraping for your specific situation.

Frequently Asked Questions

Is web scraping legal? It depends on what you're scraping, how you're doing it, and what you do with the data. Scraping publicly available data is generally fine, but you need to respect copyright, terms of service, and privacy laws. When in doubt, talk to a lawyer.

Can I scrape any website? Technically, you can scrape most websites. But should you? That's the real question. Always check robots.txt, terms of service, and relevant laws before you start.

How often should I run scraping jobs? It depends on how fresh your data needs to be and what the target website can handle. For most business uses, daily or weekly scraping gives you current data while being respectful of server resources.

What data formats do web scrapers support? Most modern scrapers can export to CSV, JSON, XML, and Excel. Many can also integrate directly with databases or apps through APIs. Visualping offers Google Sheets integration for seamless data flow.

Do I need programming skills to use web scrapers? Not necessarily. Visual tools like Visualping and ParseHub don't require coding. That said, technical knowledge helps with complex scenarios and custom integrations.

How do I handle website changes that break my scraper? Set up monitoring to catch when pages change structure. Tools like Visualping's change detection can alert you when target pages get modified, so you know to update your scraper.

Disclosure: This article talks about Visualping, which is our product, alongside competing solutions. We've worked hard to provide objective comparisons based on actual tool capabilities and real-world applications.

Want to monitor web changes that impact your business?

Sign up with Visualping to get alerted of important updates, from anywhere online.

The Visualping Team

This guide is provided by Visualping's data extraction team. We have over 10 years of combined experience in web scraping, data engineering, and business intelligence. Our team regularly evaluates web scraping tools and best practices to help businesses make smart technology decisions.